Critical Perspectives on the Metascience Reform Movement

A Few Notes on the Center for Open Science’s Recent Symposium

The Center for Open Science’s symposium on “Critical Perspectives on the Metascience Reform Movement” took place on 7th March 2024. It was organised by Sven Ulpts, and it included presentations by Bart Penders, Tom Hostler, Stephan Guttinger, Sarahanne Field, Nicole Nelson, and Berna Devezer.

The symposium highlights recent work that invites us to think more carefully about how power is used and abused in metascientific collaborations; how open science workload is seen and unseen; how replications are conceived; how the metascience community is organised; and how science reforms are potentially homogenizing and may favour procedure over the actual science. Tim Errington, the COS's Senior Director of Research, introduced the presentations by explaining that this line of more critical, reflective, meta-metascience is “healthy! We want to embrace a self-scepticism enterprise as much as possible.” Well said!

You can find recordings of each presentation here:

There are also abstracts of each presentation here.

Here are my notes on the presentations. You can also click on the times to the left of each title to go straight to each presentation.

3:01 – “Shamed Into Good Science” by Bart Penders

Bart discusses his interviews with researchers taking part in Many Labs studies and considers the power structures that have emerged within them. In discussing mechanical objectivity, he notes that:

A focus on process and the eradication of all sorts of biases from that process means that we have to focus on the weak link, and that weak link is us! ... If it’s us that threatens the integrity of science, then it’s us that has to go!

He points out that a push for mechanical objectivity has been seen throughout the history of science, and it’s something that we’re also seeing in the context of metascience. But do Many Labs collectives represent the removal of the self from science or the implementation of a more social, collective self? Is it possible that, as a collective, Many Labs wield even greater social (human) power than traditional scientific projects? If so, it seems to me that they may entail even more human influence and bias than traditional scientific projects.

For more, see:

Penders, B. (2022). Process and bureaucracy: Scientific reform as civilisation. Bulletin of Science, Technology & Society, 42(4), 107-116. https://doi.org/10.1177/02704676221126388

34:59 – “The Invisible Workload of Open Research” by Thomas Hostler

Tom notes that “a lot of metascience is too focused on the abstract research process.” We need to think about the implementation of science reforms in the university context. “Open research takes more time than closed research.” Think about the time spent preparing preregistrations, data availability statements, transparency statements, author contribution statements, meta-data descriptions, and so on. Do universities give researchers more time to do open research? Of course not! And although each task may only take a few minutes, their multiplicity may lead to “death by a thousand 10-minute tasks!” Tom provides some particularly pernicious examples of red tape, rule redundancy, and robotic bureaucracy. He concludes that:

We need to think about the time costs of open research. We need to work together to reduce rule redundancy. We need to ‘see’ that open research takes time.

I agree! As Bart highlighted in his presentation, the activist side of metascience pushes for reforms in the context of a “moral panic.” But that same morality should motivate a concern about the welfare of our overworked scientists as well as the science itself.

For more, see…

Hostler, T. J. (2023). The invisible workload of open research. Journal of Trial & Error. https://doi.org/10.36850/mr5

1:01:23 – “Why Replication Is Underrated” by Stephan Guttinger

Stephan responds to Uljana Feest’s (2019) article “Why replication is overrated.” He agrees with her that “dedicated replication studies” are overrated. However, he argues that “inbuilt replication samples” are underrated. This second type of replication is much less recognised but much more common than dedicated replication studies. Inbuilt replications are already built into an initial study as “micro-replications.” For example, you might recognize an inbuilt replication when researchers introduce a finding in their results with the phrase: “consistent with previous observations…” From my perspective, robustness analyses operate as a kind of inbuilt replication (see also Haig, 2022). Stephan concludes that “inbuilt replication” has a “forward-looking role” because it increases trust in the current findings, as opposed to the “backward-looking role” of dedicated replications, which aim to increase trust in past findings. However, in response to a question from the audience, he agrees that “forward-looking” and “backward-looking” may be somewhat misleading terminology here and that there may be better ways of explaining this.

For more, see…

Guttinger, S. (2019). A new account of replication in the experimental life sciences. Philosophy of Science, 86(3), 453-471. https://doi.org/10.1086/703555

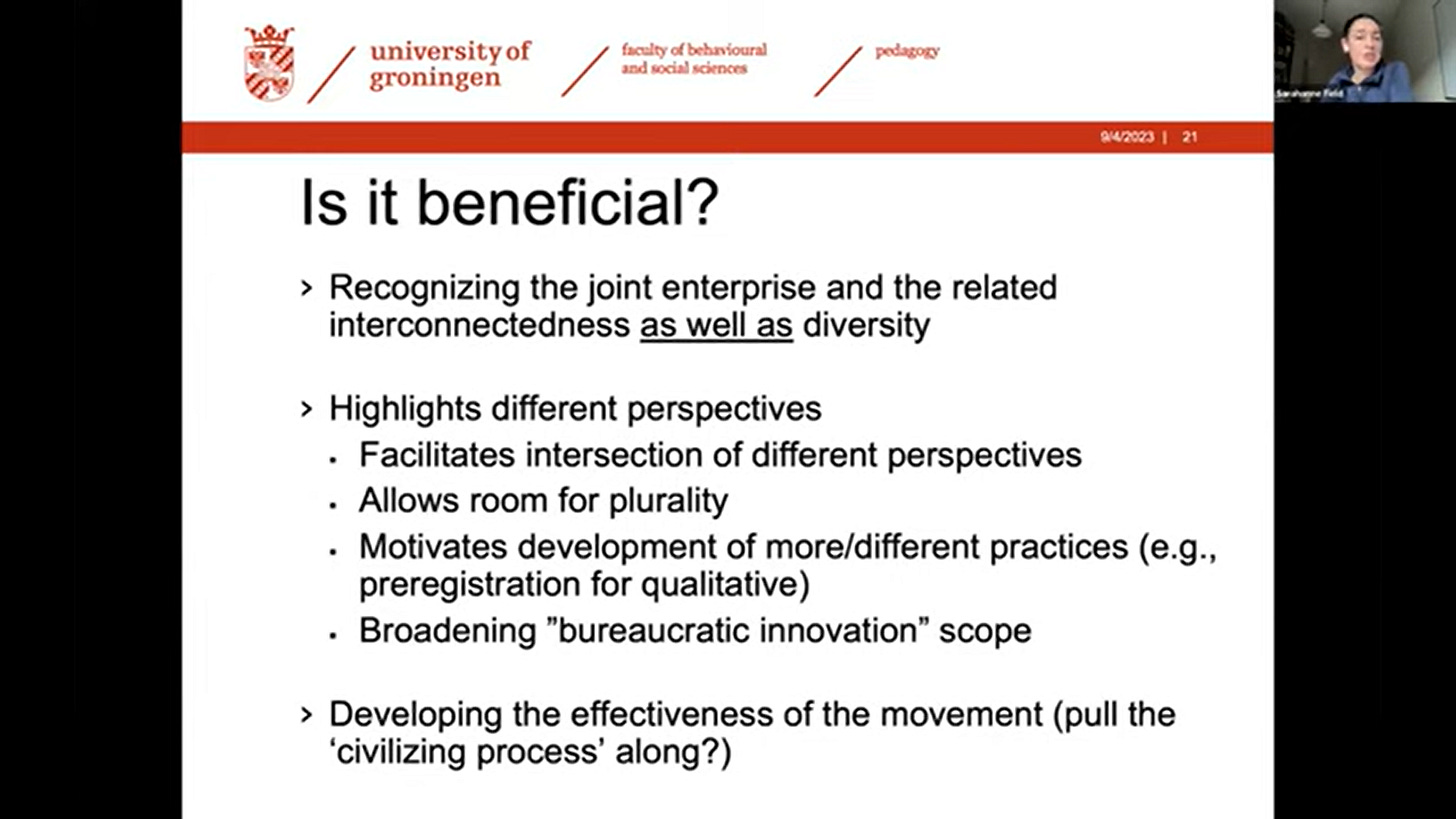

1:24:52 – “Communities of Practice in the Science Reform Movement - Recognizing and Mobilizing Diverse Contributions to Science Reform” by Sarahanne Field

Sarahanne discussed her ethnographic study of the metascience and science reform Twitter community. Her network analysis revealed that the community was not as dense as expected, and it made more sense to consider the network as a series of relatively separate subcommunities. There were four main subcommunities: (1) platforms and stats; (2) open science journals; (3) crisis of confidence people; and (4) open access and libraries. Her evidence indicates that it makes more sense to conceive metascience as a constellation of subcommunities rather than a monolith. It’s also more beneficial to conceptualise metascience in this way because it facilitates the intersection of different perspectives and allows room for plurality. Hence, as per Sarahanne’s title, this conceptualisation is better for mobilizing more diverse contributions to science reform, including, one would hope, the contributions of more critical metascientists!

For more, see…

Field, S. (2022). Charting the constellation of science reform. https://research.rug.nl/en/publications/charting-the-constellation-of-science-reform

1:49:12 – “The Decline of Scientific Individualism?” by Nicole Nelson

Nicole began her presentation by asking:

Do open science/metascience innovations reduce heterogeneity in scientific practices?

She undertakes an insightful historical analysis of previous scientific communities that evolved to use “standard organisms” (C. elegans, rats, mice, etc.) in their investigations and highlights the diversity costs that were incurred as a result. In the same way, for example, open science checklists may not simply increase transparency, but also change practices, leading to greater research homogeneity. Is this a problem? Well, I guess homogeneity is good to the extent that the advocated research practice is good. But it also runs the risk of being bad if the advocated research practice has some (hidden) costs or problems, which leads us nicely on to Berna’s presentation.

For related work, see…

Nelson, N. C., Chung, J., Ichikawa, K., & Malik, M. M. (2022). Psychology exceptionalism and the multiple discovery of the replication crisis. Review of General Psychology, 26(2), 184-198. https://doi.org/10.1177/10892680211046508

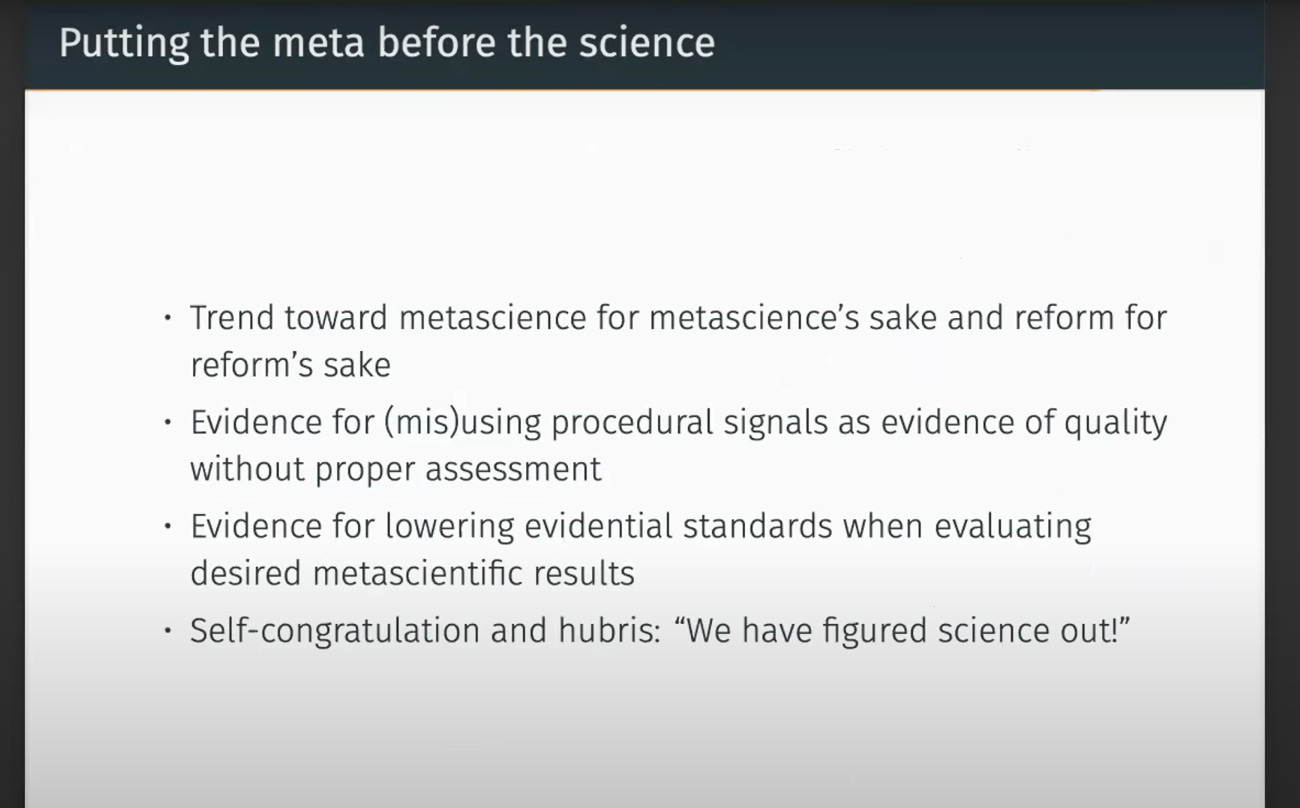

2:14:36 – “Meta Makeover: Ditching Dogmas and Raising Standards of Evidence” by Berna Devezer

Berna argues that the science reform movement has (unintentionally) prioritized “procedures” (larger samples, preventing p-hacking, preregistration, replications, open data and methods) over “the actual content of science” (better quality samples, model specification, systematic exploration, theory development, formalism). In this sense, metascience relegates science to a secondary role, literally “putting the meta before the science”! She provides several examples:

Replications: “The more the merrier?” But why should we attempt to replicate scientifically flawed studies?

Multisite replications: “The bigger, the better?” But often, bigger means more complex models that lead to worse inferences.

Preregistration: “If it exists, it’s good!” But people sometimes use preregistration as a signal of quality, when it isn’t (especially when people don’t read them!).

In addition, we should be careful about potential researcher bias in the metascience community vis-a-vis “lowering evidential standards when evaluating desired metascientific results.” She ends with a call for greater engagement with critical perspectives in this area.

For more, see…

Bak-Coleman, J. B., & Devezer, B. (2023, November 20). Causal claims about scientific rigor require rigorous causal evidence. MetaArXiv. https://doi.org/10.31234/osf.io/5u3kj