Preregistration, Severity, and Deviations

Preregistration does not improve the transparent evaluation of severity in Popper’s philosophy of science or when deviations are allowed

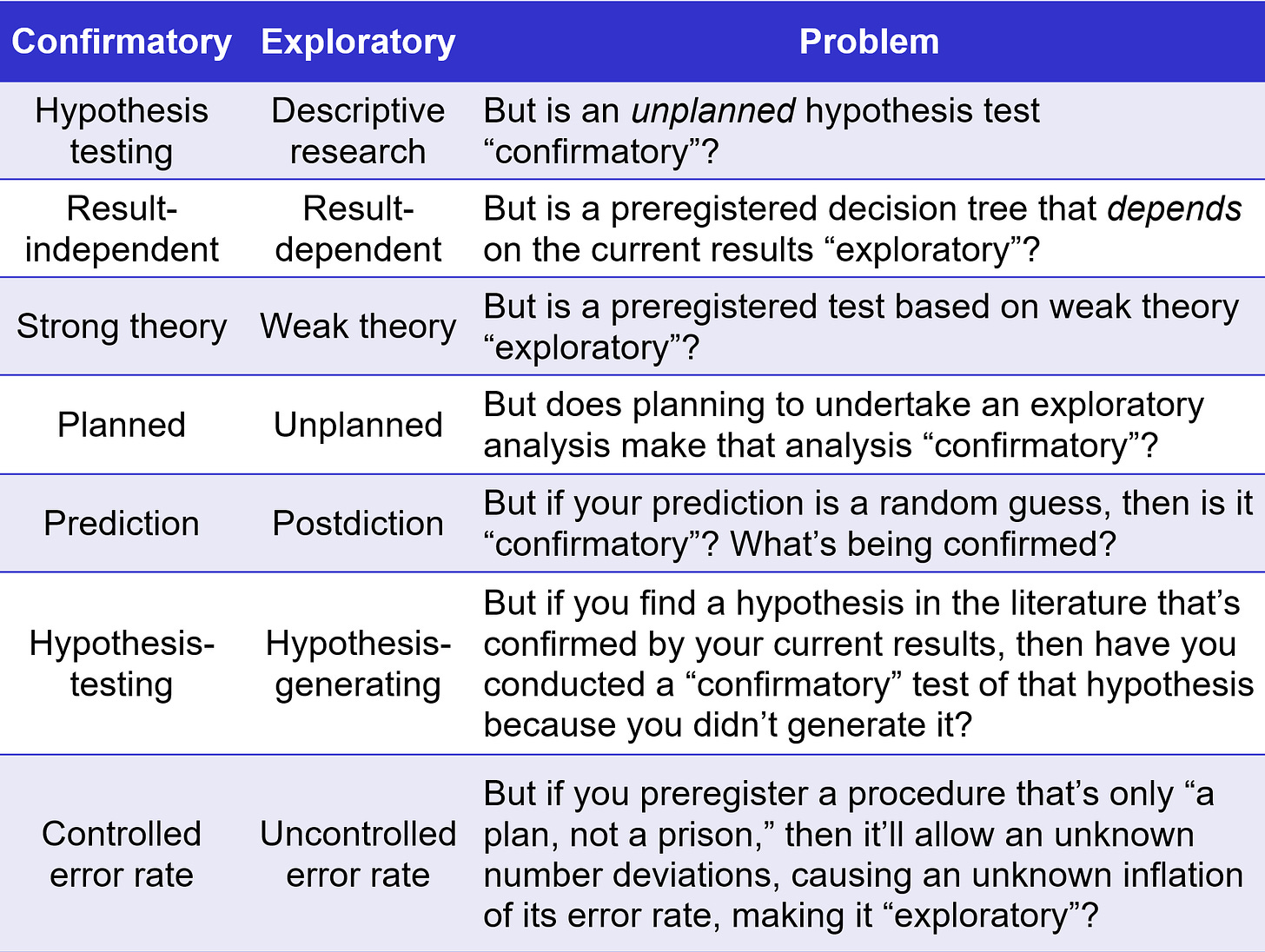

Preregistration Distinguishes Between Exploratory and Confirmatory Research?

Previous justifications for preregistration have focused on the distinction between “exploratory” and “confirmatory” research (e.g., Nosek & Lakens, 2014). However, as I discuss in this recent presentation, this distinction faces some problems. For example, the distinction does not appear to have a formal definition in either statistical theory or the philosophy of science. In addition, critics have questioned related concerns about the “double use” of data and “circular reasoning” (Devezer et al., 2021; Rubin, 2020, 2022; Rubin & Donkin, 2024; Szollosi & Donkin, 2021; see also Mayo, 1996, pp. 137, 271-275; Mayo, 2018, p. 319).

Preregistration Improves the Transparent Evaluation of Severity

Recently, Lakens and colleagues have provided a more coherent justification for preregistration based on Mayo’s (1996, 2018) error statistical approach (Lakens, 2019, 2024; Lakens et al., 2024; see also Vize et al., 2024). Specifically, Lakens (2019) argues that “preregistration has the goal to allow others to transparently evaluate the capacity of a test to falsify a prediction, or the severity of a test” (p. 221).

A hypothesis passes a severe test when there is a high probability that it would not have passed, or passed so well, if it were false (Mayo, 1996, 2018). A test procedure’s error probabilities play an important role in evaluating severity. In particular, “pre-data, the choices for the type I and II errors reflect the goal of ensuring the test is capable of licensing given inferences severely” (Mayo & Spanos, 2006, p. 350). For example, a test procedure with a nominal pre-data Type I error rate of α = 0.05 is capable of licensing specific inferences with a minimum “worst case” severity of 0.95 (i.e., 1 – α; Mayo, 1996, p. 399).
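The link between a fixed test procedure's nominal Type I error rate and its worst-case severity can be checked with a small simulation. The sketch below is illustrative only and is not taken from the article: it assumes normally distributed data with a known standard deviation, a two-sided one-sample z-test, and a procedure that is applied identically to every sample. The function name and all parameter values are hypothetical.

```python
import random
from statistics import NormalDist


def simulate_type_i_error_rate(alpha=0.05, n=20, n_sims=20_000, seed=1):
    """Estimate how often a fixed two-sided z-test rejects a true null.

    Assumptions (illustrative only): data are N(0, 1), sigma = 1 is known,
    and the same test is applied to every sample with no deviations.
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    rejections = 0
    for _ in range(n_sims):
        sample_mean = sum(rng.gauss(0.0, 1.0) for _ in range(n)) / n
        z = sample_mean * n ** 0.5  # (mean - 0) / (sigma / sqrt(n)), sigma = 1
        if abs(z) > z_crit:
            rejections += 1
    return rejections / n_sims


rate = simulate_type_i_error_rate()
print(f"estimated long-run Type I error rate: {rate:.3f}")
print(f"implied worst-case severity (1 - rate): {1 - rate:.3f}")
```

Under these assumptions, the estimated rejection rate should sit close to the nominal α, so the implied worst-case severity should sit close to 1 – α. The sketch says nothing about procedures whose implementation changes from sample to sample.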

Importantly, “biasing selection effects” in the experimental testing context (e.g., p-hacking) can lower the capability of a test procedure to license inferences severely by increasing the error probability with which the procedure passes hypotheses. From this error statistical perspective, preregistration allows a more transparent evaluation of the capability of a test procedure to perform severe tests. In particular, preregistration reveals a researcher’s planned hypotheses, methods, and analyses and enables a comparison with their reported hypotheses, methods, and analyses in order to identify any biasing selection effects in the experimental testing context that may increase the test procedure’s error probabilities and lower its capability for severe tests.

What Type of Severity?

Mayo’s (1996, 2018) error statistical conceptualization of severity is not the only one out there! Several other types of severity have been proposed by Bandyopadhyay and Brittan (2006), Hellman (1997, p. 198), Hitchcock and Sober (2004, pp. 23-25), Horwich (1982, p. 105), Lakatos (1968, p. 382), Laudan (1997, p. 314), Popper (1962, 1983), and van Dongen et al. (2023). Furthermore, preregistration may not facilitate the transparent evaluation of these other types of severity. In my recent article, I illustrate this point by showing that, although preregistration can facilitate the transparent evaluation of Mayoian severity, it does not improve the transparent evaluation of Popperian severity.

According to Popper, a researcher’s private, biased, psychological reasons for conducting and reporting their specific test (e.g., because their previous tests did not yield a significant result) and for constructing their specific hypothesis (e.g., because it was passed by the current result) belong to a “World 2” of subjective beliefs, intentions, and experiences (i.e., the context of discovery). In contrast, Popper’s deductive method of hypothesis testing refers to researchers’ formal, public specifications in a “World 3” of objective problems, theories, and reasons (i.e., the context of justification). Consequently, a researcher’s hidden, unreported “biasing selection effects” do not enter into an evaluation of Popperian severity because they are part of World 2, and “science is part of world 3, and not of world 2” (Popper, 1974b, p. 1148). Hence, a perfectly valid measurement of Popperian severity can be made using a potentially p-hacked result and a potentially HARKed hypothesis, because secretive p-hacking and HARKing occur in World 2, not World 3.

Popper also proposed a “requirement of sincerity” that the evidence for a hypothesis must be the result of a genuine and sincere attempt to refute it (e.g., Popper, 1983, p. 235). Importantly, however, he was clear that sincerity “is not meant in a psychologistic sense” (Popper, 1974b, p. 1080). Hence, again, Popperian “sincerity” is unaffected by biasing selection effects in World 2. Instead, sincerity is evaluated via a critical rational discussion of the objective, public theories and reasoning associated with a hypothesis test in World 3. As Popper (1974b) explained:

“I have always tried to show that sincerity in the subjective sense is not required, thanks to the social character of science which has (so far, perhaps no further) guaranteed its objectivity.” (p. 1080).

Of course, individual scientists are biased! However, as Popper (1994, p. 7) explained, “it need not create a great obstacle to science if the individual scientist is biased in favour of a pet theory,” because science proceeds on the basis of the collective criticism of World 3 arguments and theories by other scientists. Hence, “if you are biased in favour of your pet theory, some of your friends and colleagues (or failing these, some workers of the next generation) will be eager to criticize your work – that is to say, to refute your pet theories if they can” (Popper, 1994, p. 93).

In summary, preregistration does not facilitate the transparent evaluation of either Popperian severity or sincerity because neither evaluation requires knowledge of the researcher’s planned approach or unreported biasing selection effects. Instead, what’s important is (a) a well-conducted critical discussion about the objective, publicly-available information associated with a hypothesis test and (b) additional critical scrutiny of that hypothesis in the future (Popper, 1966, p. 218; Popper, 1974a, p. 22; Popper, 1983, p. 48; Popper, 1994, p. 93).

Preregistration When Deviations are Allowed

I also argue that preregistration does not improve the transparent evaluation of Mayoian severity when the associated test procedure allows deviations in its implementation. In other words, if a preregistered procedure is only treated as a “plan, not a prison” (Chambers, 2019, pp. 188, 189; DeHaven, 2017; Nosek et al., 2019, p. 817), then it will not provide a more transparent evaluation of severity than a non-preregistered procedure (for related points, see Devezer et al., 2021, p. 17; Navarro, 2020, p. 8).

To illustrate, I consider deviations that are intended to maintain or increase the validity of a test procedure in light of unexpected issues that arise in particular samples of data. For example, a researcher may use a preregistered Student’s t-test when the assumption of homogeneity is met in one sample. However, they may deviate from their plan and use Welch’s t-test when this assumption is unexpectedly violated in another sample, because Welch’s test provides a more valid approach in this situation (Lakens, 2024, p. 8).

A test procedure that allows these sample-based validity-enhancing deviations in its implementation will suffer an unknown inflation of its Type I error rate due to the forking paths problem (Gelman & Loken, 2013, 2014; Rubin, 2017). A forking path occurs when a researcher allows information from their sample to determine which test they conduct on that sample. The problem is that, in a hypothetical long run of random sampling, this approach results in different tests being used to test the same joint intersection null hypothesis (e.g., Student’s t-test and Welch’s t-test in the previous example). Hence, forking paths produce multiple tests in a hypothetical long run of sampling, and these multiple tests inflate the test procedure’s Type I error rate.

A single sample-based validity-enhancing deviation opens up a single forking path. However, during a hypothetical long run of random sampling, different samples may require many different validity-enhancing deviations, leading to an unknown inflation of the test procedure’s Type I error rate and, consequently, an unknown reduction of its capability to license inferences with Mayoian severity (for related points, see Ditroilo et al., 2025, p. 1111; Mayo, 1996, pp. 313-314; Mayo, 2018, pp. 200-201; Nosek & Lakens, 2014, p. 138; Nosek et al., 2018, p. 2601; Nosek et al., 2019, p. 816; Staley, 2002, p. 289).
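To give a rough sense of why the inflation is unknown when the number of forking paths is unknown, the following sketch computes standard bounds on the probability that at least one of k tests rejects a true joint null hypothesis. It is a simplification and not the article's own calculation: it assumes that each of the k tests has an individual Type I error rate of exactly α, and it treats the forked tests as a union of tests on the same joint null. The function name is hypothetical, and the actual long-run error rate of a given procedure will depend on how its tests are selected and how strongly they are correlated.

```python
def joint_type_i_bounds(alpha, k):
    """Bounds on P(at least one of k tests rejects a true joint null).

    Assumes each test has an individual Type I error rate of exactly alpha.
    Returns (lower, independent, upper):
      lower       - all k tests always agree (perfect dependence)
      independent - the k tests are statistically independent
      upper       - Boole's inequality, capped at 1
    """
    lower = alpha
    independent = 1 - (1 - alpha) ** k
    upper = min(1.0, k * alpha)
    return lower, independent, upper


# The number of forking paths (k) is what a flexible plan leaves unspecified.
for k in (1, 2, 5, 10):
    lower, independent, upper = joint_type_i_bounds(0.05, k)
    print(f"k={k:2d}  lower={lower:.3f}  independent={independent:.3f}  upper={upper:.3f}")
```

With k = 1 (a fixed procedure with no deviations), all three values equal α. As k grows, the gap between the lower and upper bounds widens, so without knowing k and the dependence between the tests, the joint error rate can only be placed somewhere within that widening range.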

Hence, if preregistration is treated as an adjustable plan, rather than a fixed test procedure (or “prison”), then it will not facilitate the transparent evaluation of Mayoian severity, because adjustable plans do not control Type I error rates.

I conclude that preregistration does not improve the transparent evaluation of severity (a) in Popper’s philosophy of science or (b) in Mayo’s error statistical approach when deviations are allowed.

The Article

Rubin, M. (2025). Preregistration does not improve the transparent evaluation of severity in Popper’s philosophy of science or when deviations are allowed. Synthese, 206, Article 111. https://doi.org/10.1007/s11229-025-05191-4

“Deep Dive” Podcast

Listen to a 22-minute AI-generated podcast summary of my article here.

References

Bandyopadhyay, P. S., & Brittan, G. G. (2006). Acceptibility, evidence, and severity. Synthese, 148, 259-293. https://doi.org/10.1007/s11229-004-6222-6

Chambers, C. (2019). What’s next for registered reports? Nature, 573(7773), 187-189. https://doi.org/10.1038/d41586-019-02674-6

DeHaven, A. (2017, May 23). Preregistration: A plan, not a prison. Center for Open Science. https://www.cos.io/blog/preregistration-plan-not-prison

Devezer, B., Navarro, D. J., Vandekerckhove, J., & Buzbas, E. O. (2021). The case for formal methodology in scientific reform. Royal Society Open Science, 8(3). https://doi.org/10.1098/rsos.200805

Ditroilo, M., Mesquida, C., Abt, G., & Lakens, D. (2025). Exploratory research in sport and exercise science: Perceptions, challenges, and recommendations. Journal of Sports Sciences, 43(12), 1108–1120. https://doi.org/10.1080/02640414.2025.2486871

Gelman, A., & Loken, E. (2013). The garden of forking paths: Why multiple comparisons can be a problem, even when there is no “fishing expedition” or “p-hacking” and the research hypothesis was posited ahead of time. Department of Statistics, Columbia University. Retrieved from http://www.stat.columbia.edu/~gelman/research/unpublished/p_hacking.pdf

Gelman, A., & Loken, E. (2014). The statistical crisis in science. American Scientist, 102, 460. http://dx.doi.org/10.1511/2014.111.460

Hellman, G. (1997). Bayes and beyond. Philosophy of Science, 64, 191–221. https://doi.org/10.1086/392548

Hitchcock, C., & Sober, E. (2004). Prediction versus accommodation and the risk of overfitting. British Journal for the Philosophy of Science, 55(1), 1-34. https://doi.org/10.1093/bjps/55.1.1

Horwich, P. (1982). Probability and evidence. Cambridge University Press.

Lakatos, I. (1968). Changes in the problem of inductive logic. Studies in Logic and the Foundations of Mathematics, 51, 315-417. https://doi.org/10.1016/S0049-237X(08)71048-6

Lakens, D. (2019). The value of preregistration for psychological science: A conceptual analysis. Japanese Psychological Review, 62(3), 221–230. https://doi.org/10.24602/sjpr.62.3_221

Lakens, D. (2024). When and how to deviate from a preregistration. Collabra: Psychology, 10(1), Article 117094. https://doi.org/10.1525/collabra.117094

Lakens, D., Mesquida, C., Rasti, S., & Ditroilo, M. (2024). The benefits of preregistration and Registered Reports. Evidence-Based Toxicology, 2(1), Article 2376046. https://doi.org/10.1080/2833373X.2024.2376046

Laudan, L. (1997). How about bust? Factoring explanatory power back into theory evaluation. Philosophy of Science, 64(2), 306-316. https://doi.org/10.1086/392553

Mayo, D. G. (1996). Error and the growth of experimental knowledge. University of Chicago Press.

Mayo, D. G. (2018). Statistical inference as severe testing: How to get beyond the statistics wars. Cambridge University Press.

Mayo, D. G., & Spanos, A. (2006). Severe testing as a basic concept in a Neyman–Pearson philosophy of induction. The British Journal for the Philosophy of Science, 57(2), 323-357. https://doi.org/10.1093/bjps/axl003

Navarro, D. J. (2020). Paths in strange spaces: A comment on preregistration. PsyArXiv. https://doi.org/10.31234/osf.io/wxn58

Nosek, B. A., & Lakens, D. (2014). Registered reports. Social Psychology, 45(3), 137–141. https://doi.org/10.1027/1864-9335/a000192

Nosek, B. A., Beck, E. D., Campbell, L., Flake, J. K., Hardwicke, T. E., Mellor, D. T., van ’t Veer, A. E., & Vazire, S. (2019). Preregistration is hard, and worthwhile. Trends in Cognitive Sciences, 23(10), 815–818. https://doi.org/10.1016/j.tics.2019.07.009

Nosek, B. A., Ebersole, C. R., DeHaven, A. C., & Mellor, D. T. (2018). The preregistration revolution. Proceedings of the National Academy of Sciences, 115, 2600-2606. http://dx.doi.org/10.1073/pnas.1708274114

Popper, K. R. (1962). Conjectures and refutations: The growth of scientific knowledge. Routledge.

Popper, K. R. (1966). The open society and its enemies (5th ed.). Routledge.

Popper, K. R. (1974a). Objective knowledge: An evolutionary approach. Oxford University Press.

Popper, K. R. (1974b). Reply to my critics. In P. A. Schilpp (Ed.), The philosophy of Karl Popper (Book II) (pp. 960-1197). Open Court.

Popper, K. R. (1983). Realism and the aim of science: From the postscript to the logic of scientific discovery. Routledge.

Popper, K. R. (1994). The myth of the framework: In defence of science and rationality. Psychology Press.

Rubin, M. (2017). An evaluation of four solutions to the forking paths problem: Adjusted alpha, preregistration, sensitivity analyses, and abandoning the Neyman-Pearson approach. Review of General Psychology, 21(4), 321-329. https://doi.org/10.1037/gpr0000135

Rubin, M. (2020). Does preregistration improve the credibility of research findings? The Quantitative Methods for Psychology, 16(4), 376–390. https://doi.org/10.20982/tqmp.16.4.p376

Rubin, M. (2022). The costs of HARKing. British Journal for the Philosophy of Science, 73(2), 535-560. https://doi.org/10.1093/bjps/axz050

Rubin, M. (2024). Inconsistent multiple testing corrections: The fallacy of using family-based error rates to make inferences about individual hypotheses. Methods in Psychology, 10, Article 100140. https://doi.org/10.1016/j.metip.2024.100140

Rubin, M., & Donkin, C. (2024). Exploratory hypothesis tests can be more compelling than confirmatory hypothesis tests. Philosophical Psychology, 37(8), 2019-2047. https://doi.org/10.1080/09515089.2022.2113771

Staley, K. W. (2002). What experiment did we just do? Counterfactual error statistics and uncertainties about the reference class. Philosophy of Science, 69(2), 279-299. https://doi.org/10.1086/341054

Szollosi, A., & Donkin, C. (2021). Arrested theory development: The misguided distinction between exploratory and confirmatory research. Perspectives on Psychological Science, 16(4), 717-724. https://doi.org/10.1177/1745691620966796

van Dongen, N., Sprenger, J., & Wagenmakers, E. J. (2023). A Bayesian perspective on severity: Risky predictions and specific hypotheses. Psychonomic Bulletin & Review, 30(2), 516-533. https://doi.org/10.3758/s13423-022-02069-1

Vize, C., Lynam, D., Miller, J., & Phillips, N. L. (2024, May 28). On the use and misuses of preregistration: A reply to Klonsky (2024). PsyArXiv. https://doi.org/10.31234/osf.io/g7dn2